6 / Canadian Government Executive // December 2016

Deliverology

abound, as do reports of its overall effectiveness. Our research categorizes the lessons learned as follows:

Practical Considerations for the Transition to the new Policy on Results

Transitioning to the new Policy on Results is not just a paper exercise intended for the Planning and Performance Measurement specialists in Corporate Services. Every Program Owner (or Program Official) needs to be directly engaged in making clear the results that their program contributes to their department’s priorities and mandate. The linkage back to the Minister’s mandate letter is imperative.

In addition, other disciplines such as policy, finance and data management should be involved to understand the implications of the new Policy on Results constructs and to ensure broader buy-in. Chief Results and Delivery Officers (CRDO) and Chief Data Officers (CDO) are in the process of being put in place, but theirs is a coordinating role, not a dictating one.

Some departments are simply porting their Program Activity Architecture (PAA) content into the new Departmental Results Framework (DRF), while others are taking a “clean sheet” approach to rethinking how programs are defined and structured.

Some are going further – updating their Logic Models or Outcomes Maps to ensure their programs can tell a comprehensive “performance story”. This will then drive which Key Performance Indicators (KPIs) are required, and not the other way around. Then the whole conversation about targets, results profiles and tolerances can begin – with the bigger picture of program performance in mind.

Finally, although it has likely been said of every significant change across an organization, sustained senior management commitment is critical. The transition will affect the programs senior managers oversee, their accountabilities and ultimately their department’s success. And that is exactly what Deliverology is meant to achieve.

G

regory

R

ichards

is the Director of

the MBA Program and of the Centre

for Business Analytics and Performance

at the Telfer School of Management at

the University of Ottawa. Richards@

telfer.uottawa.caC

arrie

G

allo

is a Partner in the

Advisory Services Practice with Interis

| BDO.

cgallo@bdo.caM

urray

K

ronick

is a Senior Manager in

the Planning and Performance Practice

with Interis | BDO.

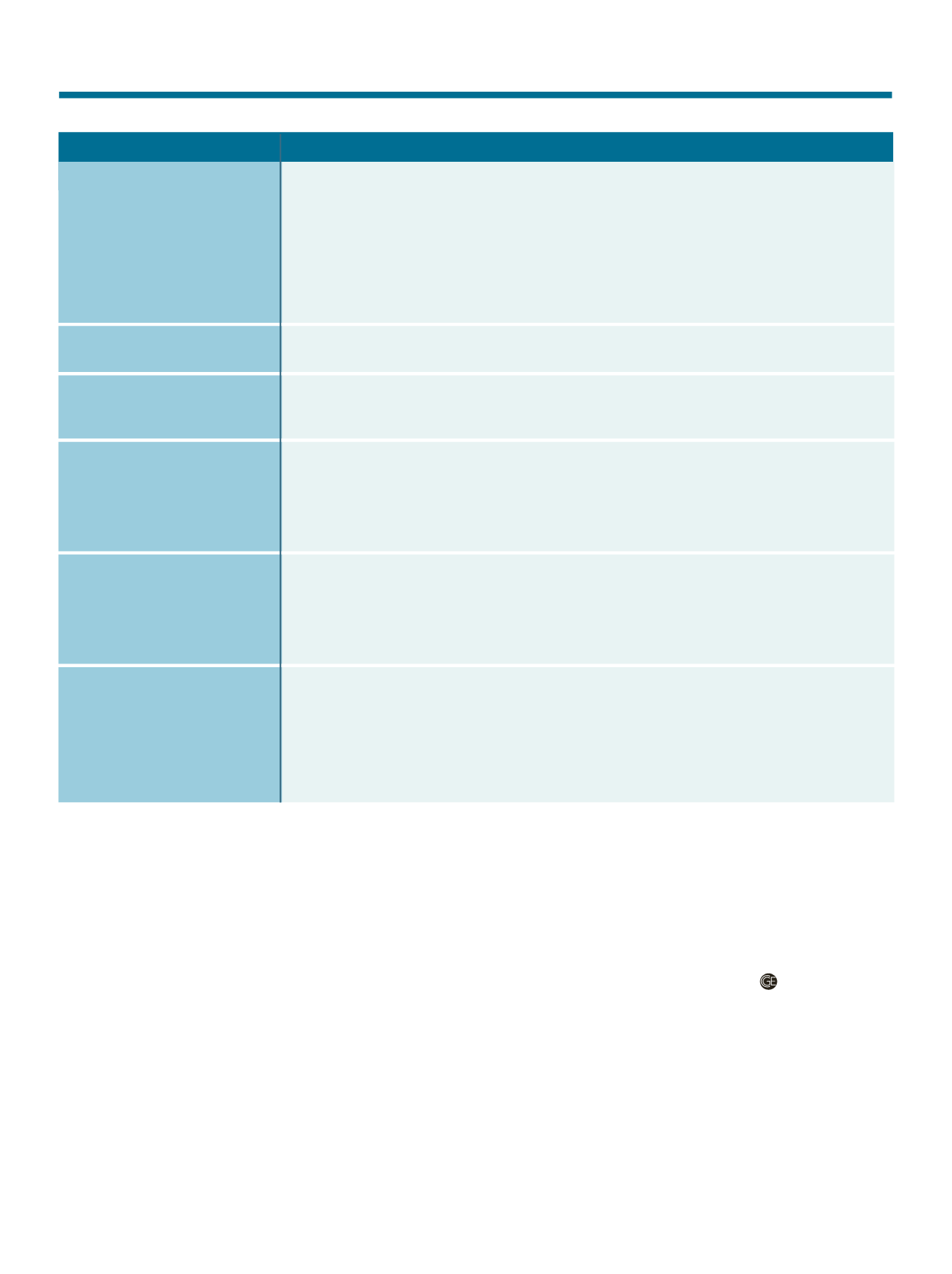

mkronick@bdo.caTable 1.

Summary of Lessons Learned from Deliverology Implementations

| Lesson Learned | Comments |
| --- | --- |
| Sustained senior management and political interest in the policy outcomes are critical for success. | It’s a truism to claim that nothing succeeds without senior leadership interest. In many cases, “interest” is demonstrated merely by signing off on the project proposal. In the successful cases of Deliverology implementations, senior leadership involvement went much further. Politicians and senior departmental leaders were involved in reviewing regular progress reports and contributed to helping remove barriers to success where needed. So, “interest” is not simply tacit agreement that the outcomes are important; it also includes direct, visible and sustained involvement to help remove barriers and allocate resources where needed. |
| The implementation will not survive without credible data. | “Objectively verifiable” data is critical. This avoids arguments about the facts so the Delivery Unit and program managers can get on with problem resolution / mid-course correction. |
| It is important to create documented routines for using the data to drive program changes. | Successful implementations had a plan of some sort for what happened to the data and the analysis: who gets it, who has decision rights, and how to evaluate the impact of changes. |
| Measurement can create a tendency to manage towards the performance standard. | A great deal of the criticism of Deliverology revolves around “gaming” of the system to meet the established performance standard. For example, in the education sector, teaching children only what they need to know to pass standardized tests obtains the result being measured, not necessarily the broader outcome of knowledgeable students. |
| Silo effects can occur. | Silo effects occur when certain groups are identified for additional attention, based on pre-established performance targets; the others can, by default, be identified for neglect. Sticking with the education sector as an example, some children performing far below the performance standard would be selected for extra help. The others at the standard could be ignored until everyone has caught up. |
| It’s important to align the organization’s “philosophy of management” with the Deliverology concept. | Deliverology has also been criticized for its apparent “command and control” culture. There are different ways of implementing it, however, and highly successful implementations have been able to push decision-making down to the front lines, ensuring that people who are doing the work are empowered to improve it. Some level of control is always needed, but it is important to match the control mechanisms with the mandate and the risk profile of the organization. |